Provisioning Role-Based Access

How to provision Role-Based Access in S3

Setting up Role-Based Access for AWS S3

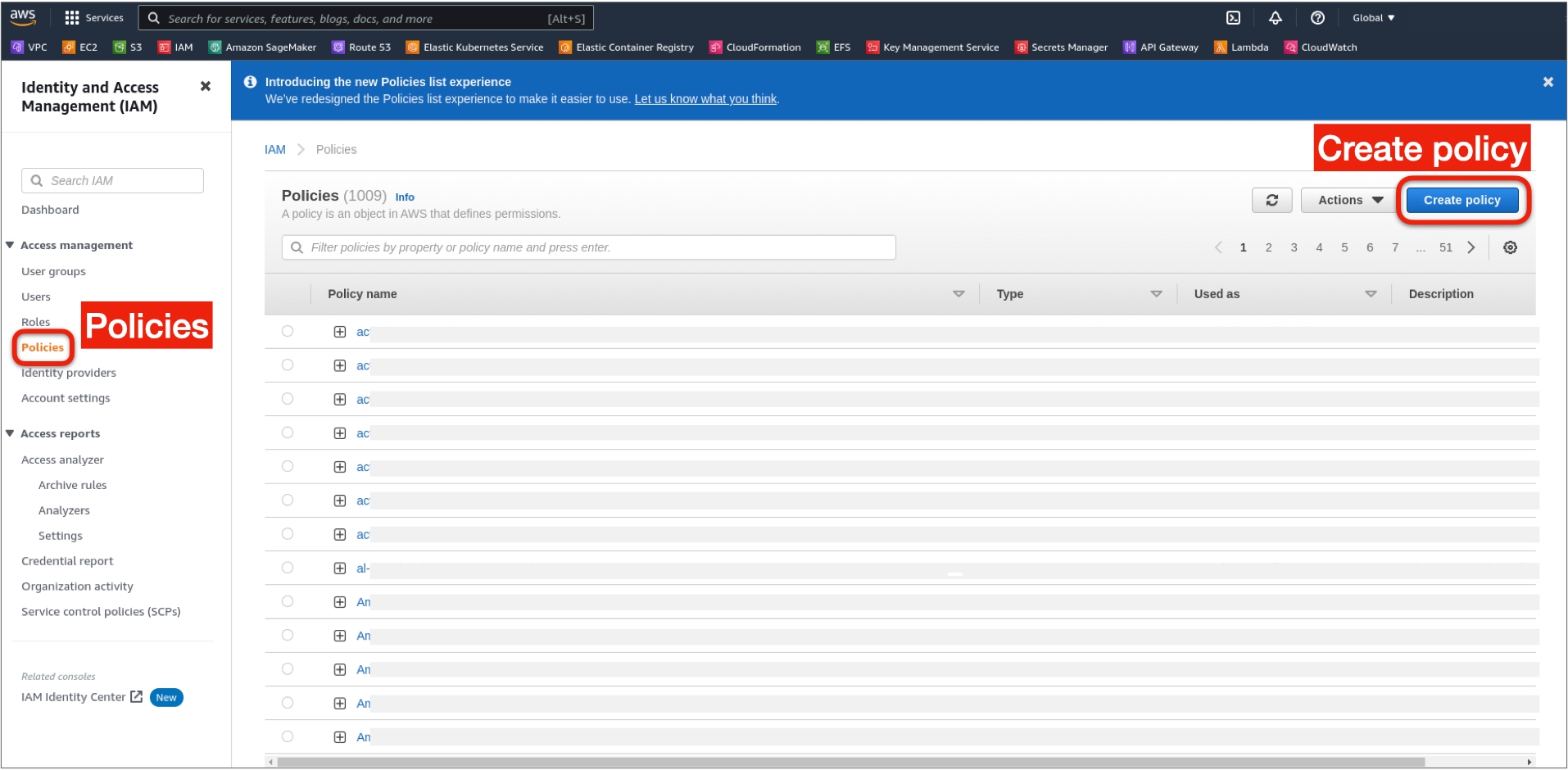

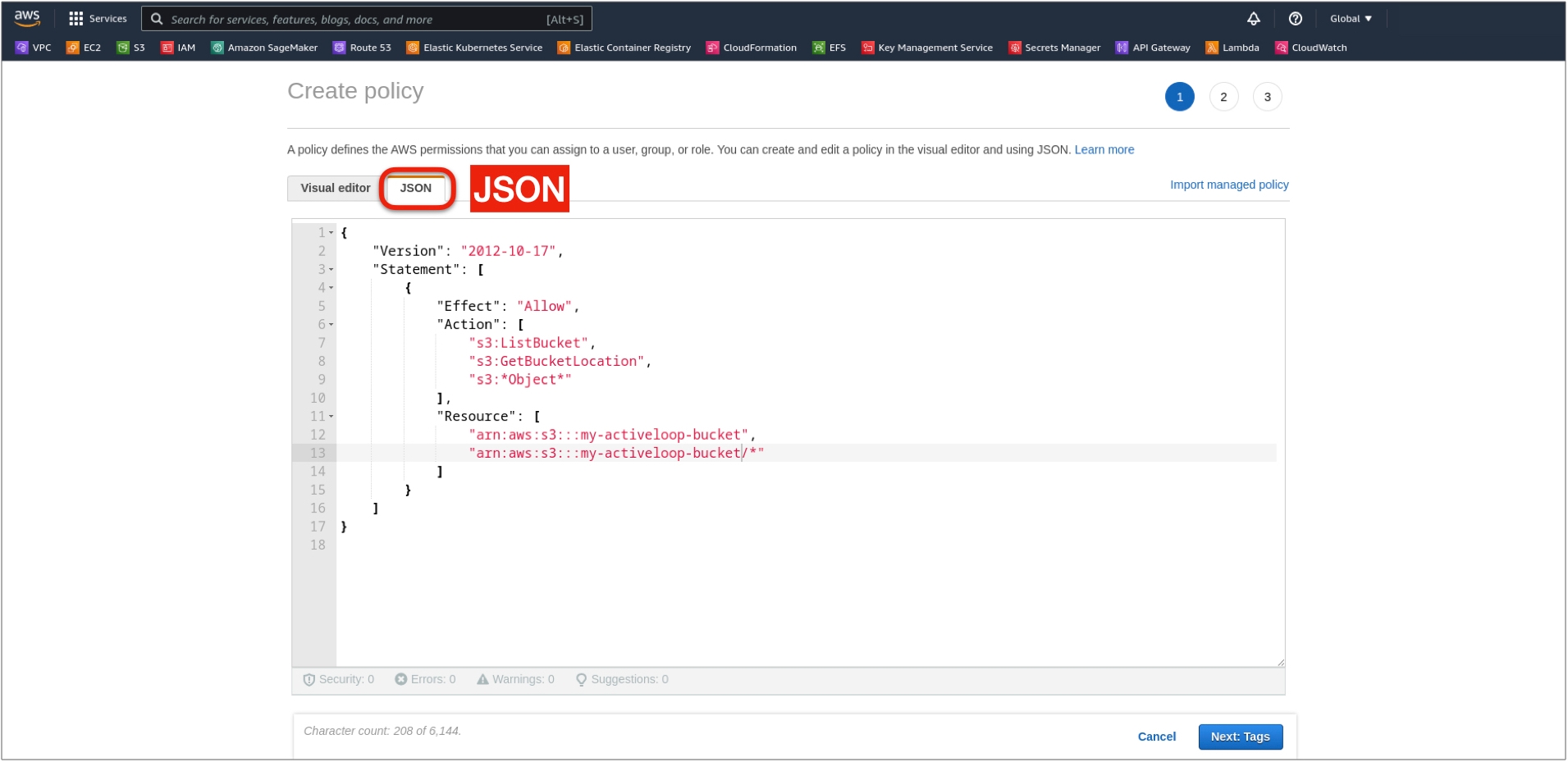

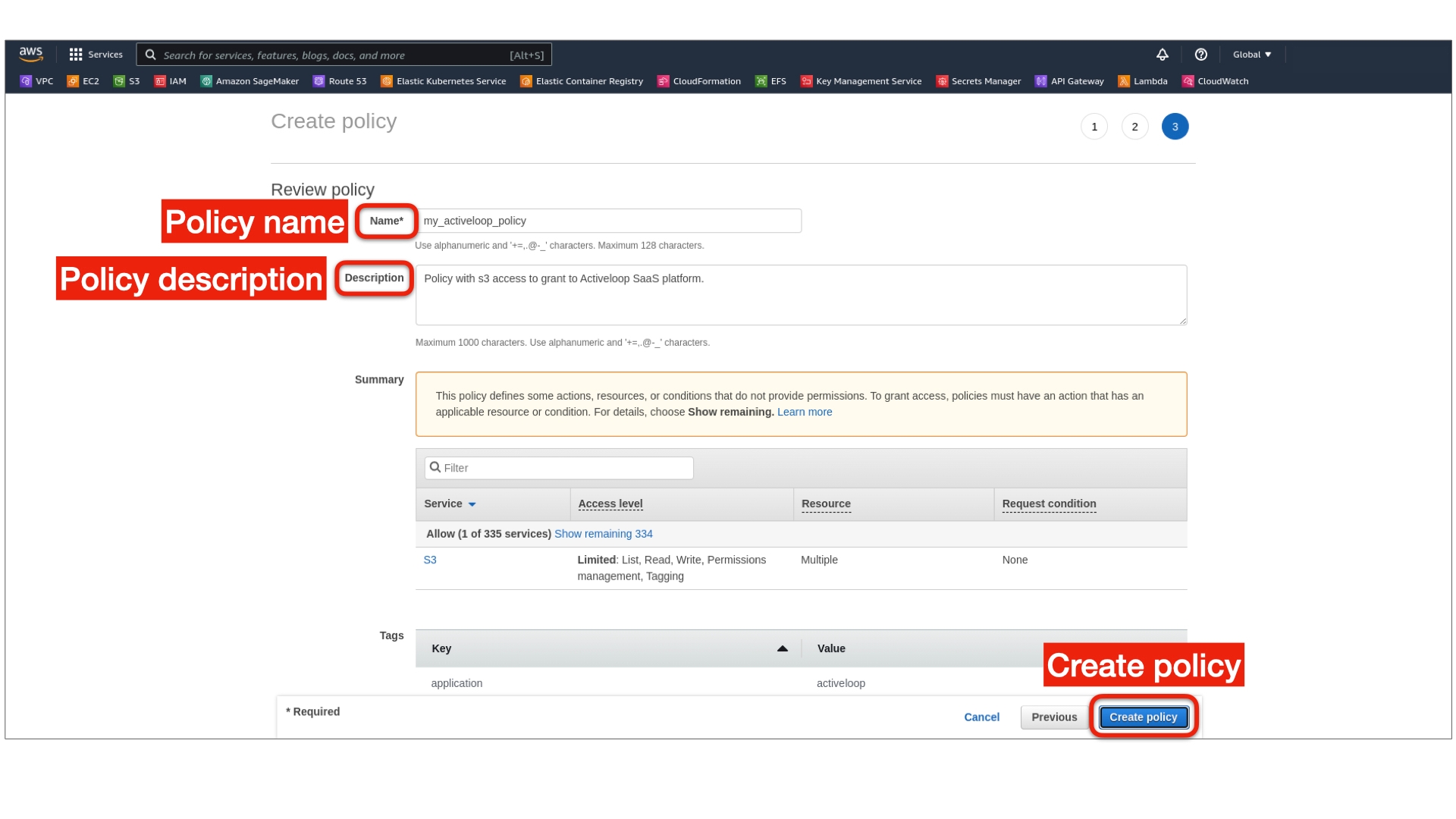

Step 1: Create the AWS IAM Policy

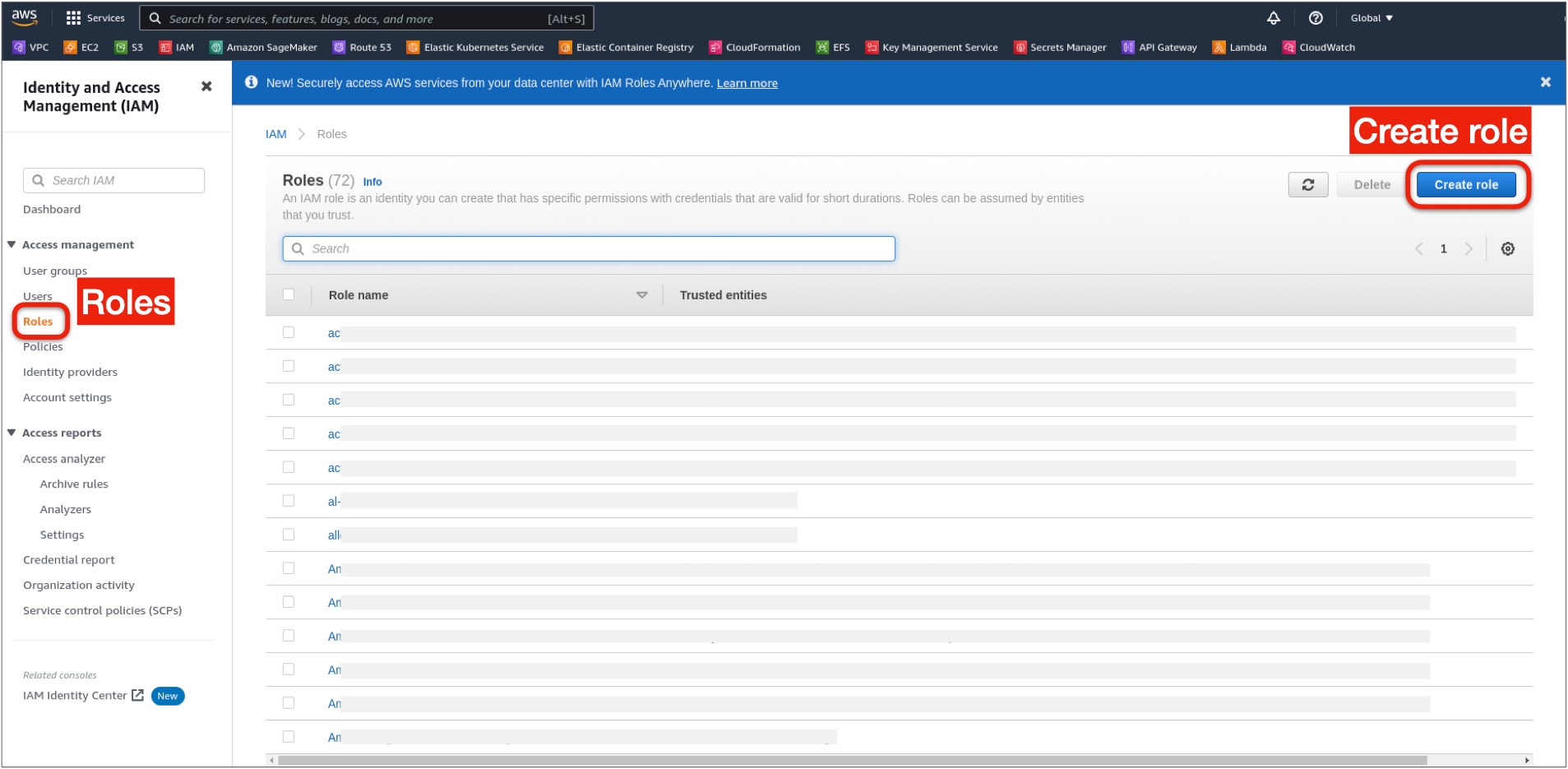

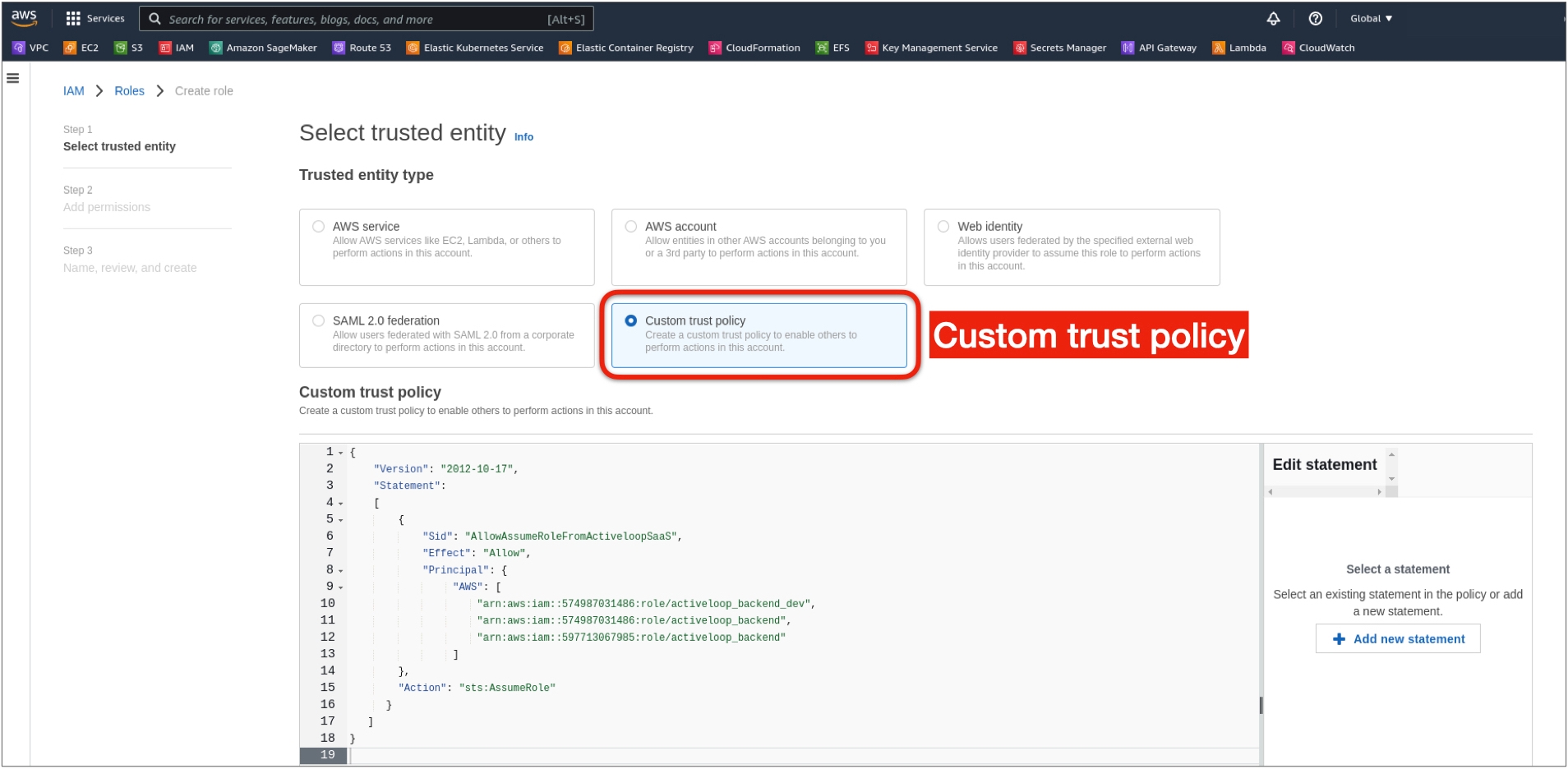

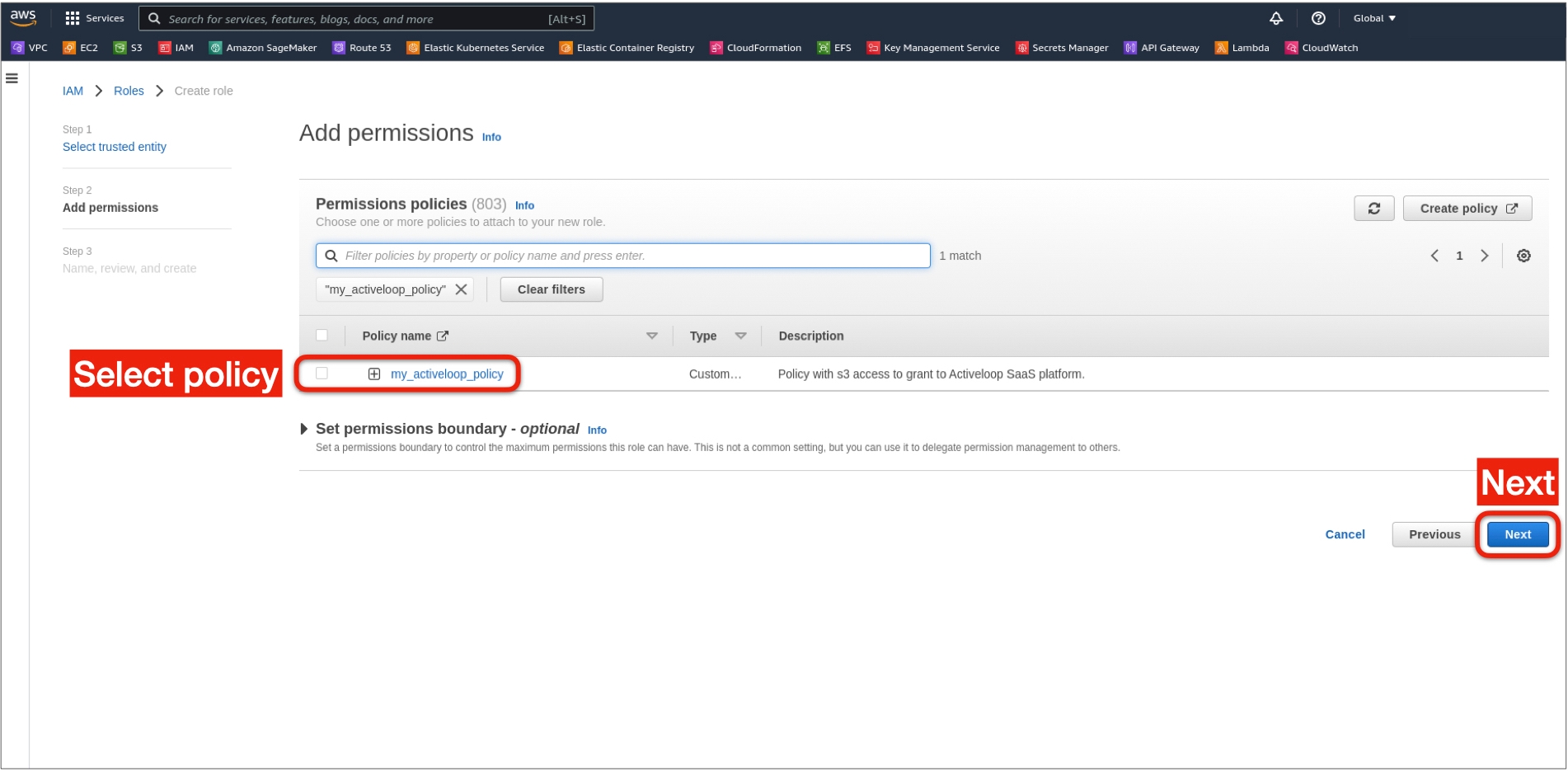

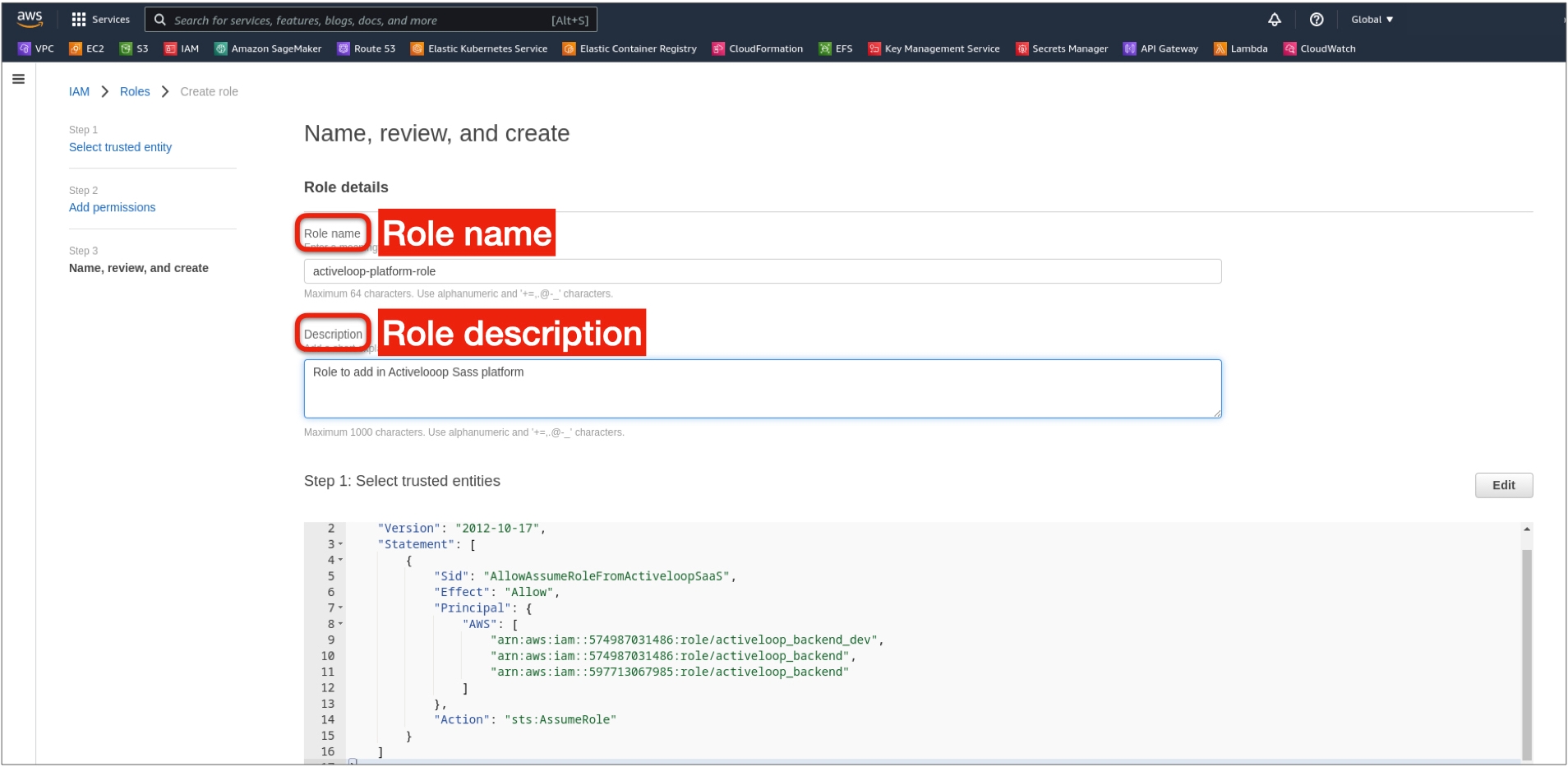
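
As a rough illustration of what the policy from Step 1 can look like, the sketch below builds an IAM policy document scoped to one bucket. The action list, the function name `build_s3_access_policy`, and the bucket name are assumptions made for this example, not Activeloop's confirmed requirements; check the exact permissions against Activeloop's documentation before use.

```python
import json

# Assumption: this action list is illustrative only. Confirm the permissions
# Activeloop actually requires before attaching the policy to a role.
BUCKET_ACTIONS = ["s3:ListBucket", "s3:GetBucketLocation"]
OBJECT_ACTIONS = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]


def build_s3_access_policy(bucket_name: str) -> dict:
    """Return an IAM policy document scoped to a single S3 bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "BucketLevelAccess",
                "Effect": "Allow",
                "Action": BUCKET_ACTIONS,
                "Resource": bucket_arn,
            },
            {
                # Object-level actions apply to keys inside the bucket.
                "Sid": "ObjectLevelAccess",
                "Effect": "Allow",
                "Action": OBJECT_ACTIONS,
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "my-deeplake-bucket" is a placeholder bucket name.
    print(json.dumps(build_s3_access_policy("my-deeplake-bucket"), indent=2))
```

The printed JSON can be pasted into the JSON tab of the IAM policy editor. Splitting bucket-level and object-level actions into two statements keeps each action paired with the resource type it applies to.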

Step 2: Create the AWS IAM Role

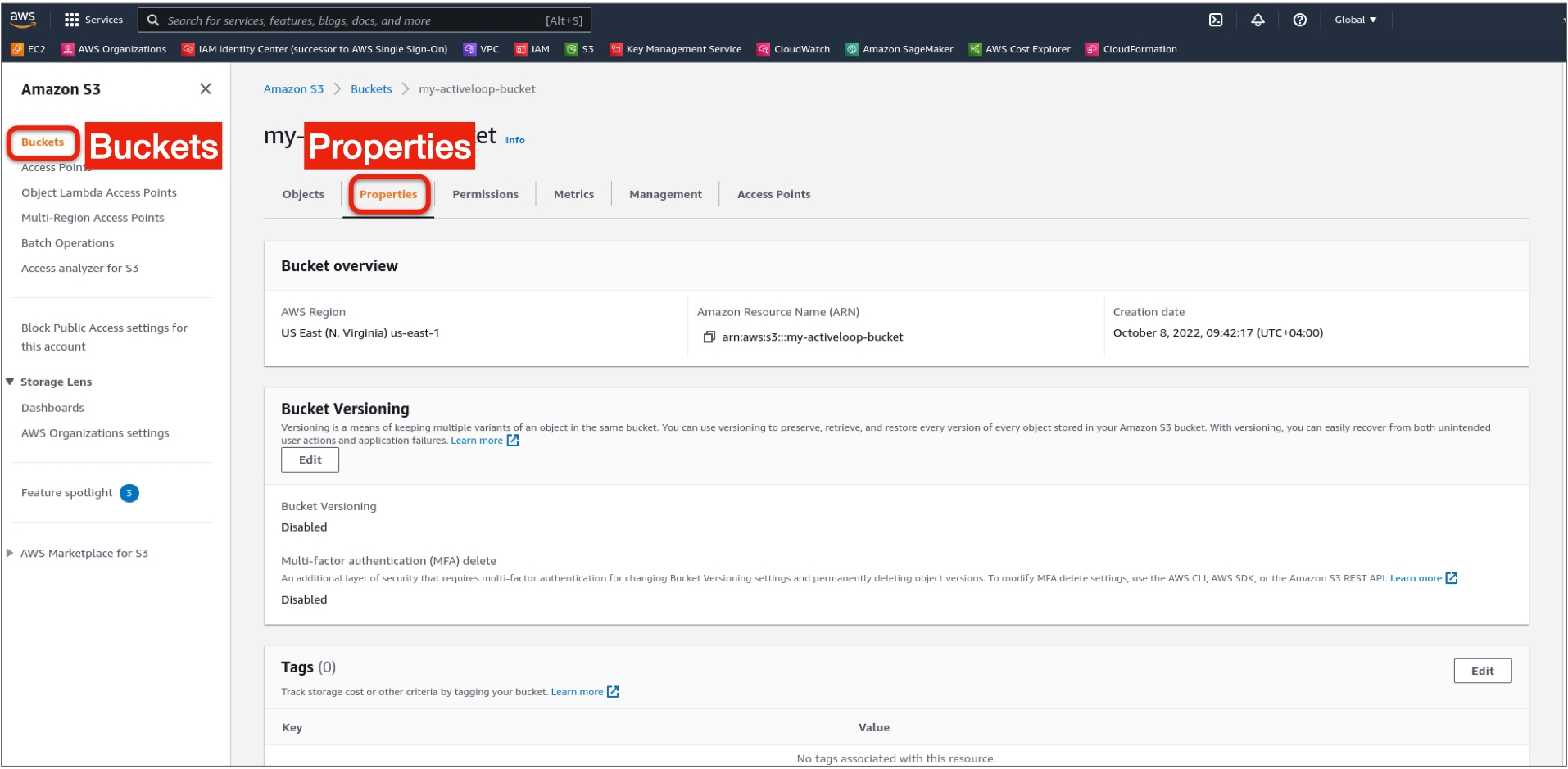

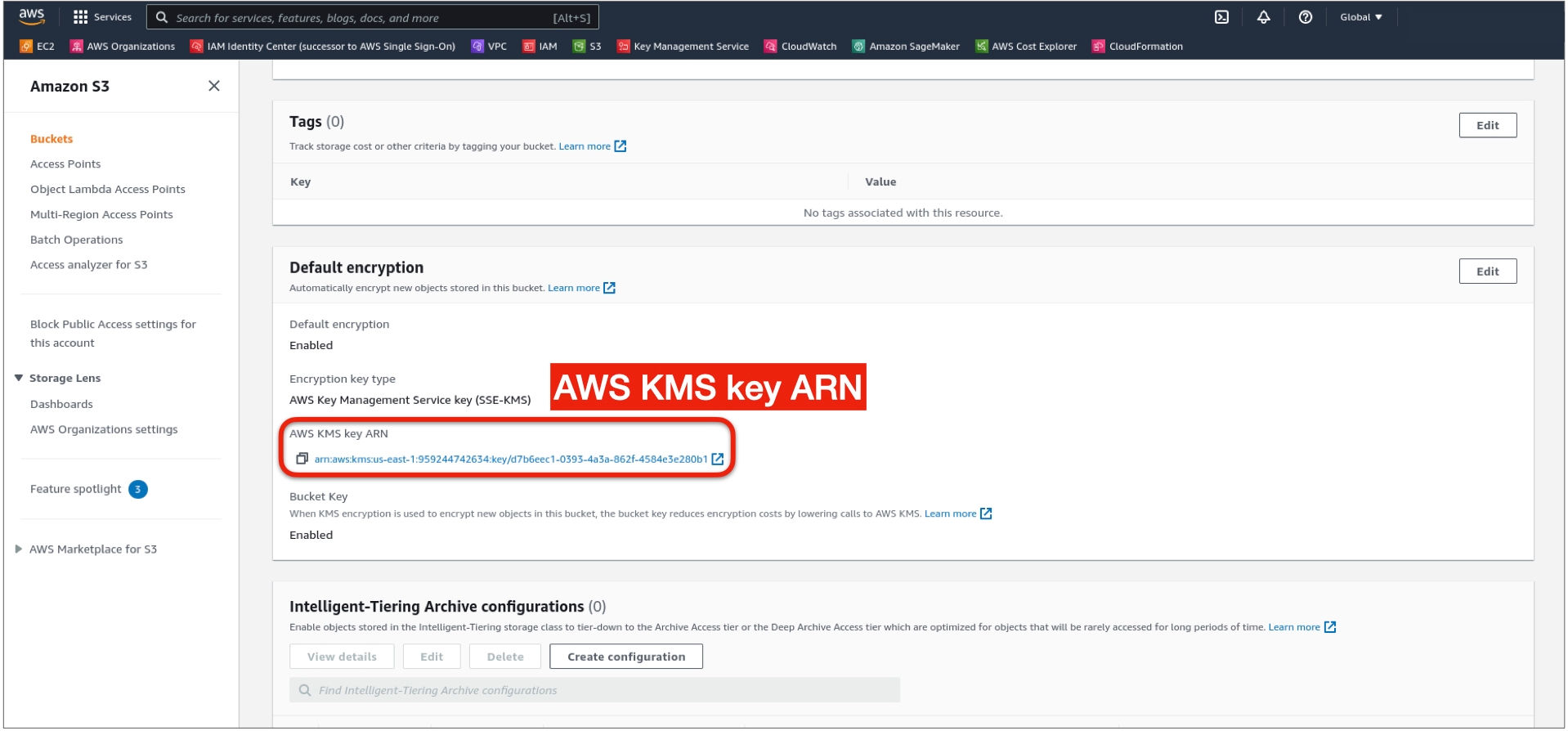
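
A role is only usable by another party if its trust policy names that party as a principal. The sketch below builds such a trust policy as a plain dictionary. The function name `build_trust_policy` and the principal ARN are placeholders for illustration; the real principal must come from Activeloop, and any additional conditions they require are not shown here.

```python
import json


def build_trust_policy(trusted_principal_arn: str) -> dict:
    """Return a trust policy that lets one principal assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The principal that is allowed to assume this role.
                "Principal": {"AWS": trusted_principal_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder ARN: replace with the principal supplied by Activeloop.
    placeholder_principal = "arn:aws:iam::111122223333:root"
    print(json.dumps(build_trust_policy(placeholder_principal), indent=2))
```

After the role is created with this trust relationship, attach the policy from Step 1 to it so that the assumed role can reach the bucket.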

Step 3: Grant Access to the AWS KMS Key (only for buckets encrypted with customer-managed KMS keys)
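
One way to grant this access is to add a statement to the KMS key policy that names the role from Step 2. The sketch below is a minimal example under that assumption. The function name, the `Sid`, and the role ARN are hypothetical, and the action list covers only reading (`kms:Decrypt`) and writing (`kms:GenerateDataKey`) objects encrypted with the key; your setup may need more or fewer actions.

```python
import json


def build_kms_key_statement(role_arn: str) -> dict:
    """Return a key policy statement that lets a role use the KMS key."""
    return {
        "Sid": "AllowRoleToUseKey",
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        # Decrypt is needed to read encrypted objects,
        # GenerateDataKey to write new ones.
        "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
        # Inside a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


if __name__ == "__main__":
    # Placeholder ARN: replace with the ARN of the role created in Step 2.
    placeholder_role = "arn:aws:iam::111122223333:role/deeplake-access"
    print(json.dumps(build_kms_key_statement(placeholder_role), indent=2))
```

The resulting statement is appended to the `Statement` list of the existing key policy rather than replacing it, so that current key administrators keep their access.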

Step 4: Enter the created AWS Role ARN (Step 2) into the Activeloop UI
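
Before pasting the value into the UI, it can help to confirm that it is the role ARN and not, for example, the policy or bucket ARN. The sketch below is a simple format check based on the general shape of IAM role ARNs. The helper name `looks_like_role_arn` is hypothetical, and the check does not verify that the role actually exists.

```python
import re

# General shape: arn:<partition>:iam::<12-digit account id>:role/<path and name>
ROLE_ARN_PATTERN = re.compile(
    r"^arn:(aws|aws-cn|aws-us-gov):iam::\d{12}:role/[\w+=,.@/-]+$",
    re.ASCII,
)


def looks_like_role_arn(value: str) -> bool:
    """Return True if the value has the format of an IAM role ARN."""
    return ROLE_ARN_PATTERN.fullmatch(value.strip()) is not None


if __name__ == "__main__":
    # Placeholder values for illustration.
    print(looks_like_role_arn("arn:aws:iam::123456789012:role/deeplake-access"))
    print(looks_like_role_arn("arn:aws:s3:::my-deeplake-bucket"))
```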
