Creating Complex Datasets

Converting a dataset with multiple annotation types (here, image-level classes and bounding boxes) to Deep Lake format is a useful way to learn how Deep Lake handles rich data.

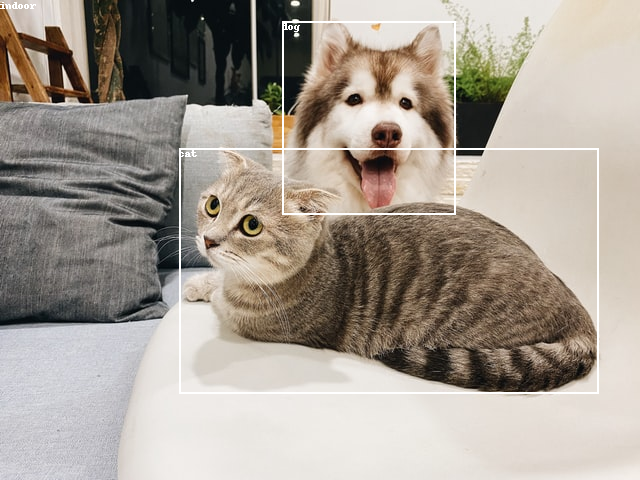

How to create datasets with multiple annotation types

This tutorial is also available as a Colab Notebook

Create the Deep Lake Dataset

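The images are stored in a classification folder whose subfolders (indoor and outdoor) are the class names. The bounding-box annotations are stored in a boxes folder as text files that share each image's filename, together with a classes.txt file:

data_dir
|_classification
    |_indoor
        |_image1.png
        |_image2.png
    |_outdoor
        |_image3.png
        |_image4.png
|_boxes
    |_image1.txt
    |_image2.txt
    |_image3.txt
    |_image4.txt
    |_classes.txt

Inspect the Deep Lake Dataset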
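The layout above carries two kinds of annotation. Each image gets a class from its parent folder under classification, and a set of bounding boxes from the .txt file with the same name under boxes. Before or while inspecting the resulting Deep Lake dataset, it can help to confirm that these files line up. The following is a minimal, library-independent Python sketch of that pairing step. It does not use the Deep Lake API. It assumes that each box file uses YOLO-style lines (class_id x_center y_center width height) and that classes.txt lists one box class name per line. The helper names read_box_file and collect_records, along with the sample box classes and coordinates in the usage part, are hypothetical.

```python
from pathlib import Path
import tempfile


def read_box_file(path):
    """Parse one box file.

    Assumption (not confirmed by the tutorial): YOLO-style lines
    "class_id x_center y_center width height", one box per line.
    """
    boxes, box_labels = [], []
    for line in Path(path).read_text().splitlines():
        parts = line.split()
        if not parts:
            continue  # tolerate blank lines
        box_labels.append(int(parts[0]))
        boxes.append([float(v) for v in parts[1:5]])
    return boxes, box_labels


def collect_records(data_dir):
    """Pair every image with its folder-derived class and its box file."""
    data_dir = Path(data_dir)
    class_dir = data_dir / "classification"
    box_dir = data_dir / "boxes"

    # Image classes are the subfolder names; sorting gives stable integer ids.
    class_names = sorted(p.name for p in class_dir.iterdir() if p.is_dir())

    # Assumption: classes.txt lists one box class name per line.
    box_class_names = [
        line.strip()
        for line in (box_dir / "classes.txt").read_text().splitlines()
        if line.strip()
    ]

    records = []
    for label, name in enumerate(class_names):
        for image_path in sorted((class_dir / name).glob("*.png")):
            # Images and box files are matched by filename stem (image1.png -> image1.txt).
            box_path = box_dir / f"{image_path.stem}.txt"
            boxes, box_labels = read_box_file(box_path) if box_path.exists() else ([], [])
            records.append(
                {"image": image_path.name, "label": label,
                 "boxes": boxes, "box_labels": box_labels}
            )
    return class_names, box_class_names, records


# Usage: build a throwaway copy of the layout (empty placeholder images) and inspect it.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "data_dir"
    for cls, stems in {"indoor": ["image1", "image2"], "outdoor": ["image3", "image4"]}.items():
        (root / "classification" / cls).mkdir(parents=True)
        for stem in stems:
            (root / "classification" / cls / f"{stem}.png").touch()
    (root / "boxes").mkdir()
    (root / "boxes" / "classes.txt").write_text("cat\ndog\n")  # hypothetical box classes
    for stem in ["image1", "image2", "image3", "image4"]:
        (root / "boxes" / f"{stem}.txt").write_text("0 0.5 0.5 0.2 0.3\n")  # placeholder box

    class_names, box_class_names, records = collect_records(root)
    print(class_names, box_class_names, len(records))
    for r in records:
        print(r["image"], class_names[r["label"]], r["boxes"])
```

Each record holds what one dataset sample needs: an image, its class label, and its boxes with their box labels. That makes a loop like this a natural place to write samples into the Deep Lake dataset. This sketch leaves out that step rather than guess at specific API calls.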
