Creating Object Detection Datasets

Converting an object detection dataset to Deep Lake format is a great way to get started with datasets of increasing complexity.

How to convert a YOLO object detection dataset to Deep Lake format

This tutorial is also available as a Colab Notebook

Object detection using bounding boxes is one of the most common annotation types for Computer Vision datasets. This tutorial demonstrates how to convert an object detection dataset from YOLO format to Deep Lake, and a similar method can be used to convert object detection datasets from other formats such as COCO and PASCAL VOC.

Create the Deep Lake Dataset

The first step is to download the small dataset below called animals object detection.

The dataset has the following folder structure:

    data_dir
    |_images
        |_image_1.jpg
        |_image_2.jpg
        |_image_3.jpg
        |_image_4.jpg
    |_boxes
        |_image_1.txt
        |_image_2.txt
        |_image_3.txt
        |_image_4.txt
        |_classes.txt

Now that you have the data, let's create a Deep Lake dataset in the ./animals_od_deeplake folder.
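Creating the dataset could look like the minimal sketch below. It assumes the Deep Lake 3.x Python API, where `deeplake.empty` creates a new, empty dataset at the given path; other versions of the library may expose a different call. The `create_local_dataset` wrapper is a hypothetical helper used here only for illustration.

```python
def create_local_dataset(path="./animals_od_deeplake"):
    """Create an empty Deep Lake dataset at `path` (Deep Lake 3.x API assumed)."""
    # Imported inside the function so that defining this helper does not
    # require Deep Lake to be installed.
    import deeplake

    # Assumption: deeplake.empty(path) returns a dataset object that tensors
    # and samples can later be added to.
    return deeplake.empty(path)
```

Calling `ds = create_local_dataset()` would then give the dataset object used in the rest of the tutorial.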

Next, let's specify the folder paths containing the images and annotations in the dataset. In YOLO format, images and annotations are typically matched by a shared filename, such as image -> filename.jpeg and annotation -> filename.txt. It's also helpful to create a list of all the image files and the class names contained in the dataset.
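One way to sketch this step with the standard library only is shown below. The helper names (`list_images_and_classes`, `annotation_path`) and the list of accepted image extensions are assumptions made for illustration. The path to `classes.txt` is passed in explicitly so that the sketch does not depend on where that file lives in the folder structure.

```python
import os

# Assumption: files with these extensions are treated as images.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def list_images_and_classes(img_folder, classes_file):
    """Return (sorted image filenames, class names read one per line)."""
    fn_imgs = sorted(
        fn for fn in os.listdir(img_folder)
        if fn.lower().endswith(IMAGE_EXTENSIONS)
    )
    with open(classes_file, "r") as f:
        # Line order matters: the line index is the numeric class label.
        class_names = f.read().splitlines()
    return fn_imgs, class_names


def annotation_path(lbl_folder, fn_img):
    """Map an image filename to the annotation file that shares its stem."""
    stem = os.path.splitext(fn_img)[0]
    return os.path.join(lbl_folder, stem + ".txt")
```

For example, `annotation_path("./animals_od/boxes", "image_1.jpg")` points to `image_1.txt` in the boxes folder. The `./animals_od` location is a placeholder for wherever the dataset was downloaded.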

Since annotations in YOLO are typically stored in text files, it's useful to write a helper function that parses the annotation file and returns numpy arrays with the bounding box coordinates and bounding box classes.
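A minimal sketch of such a helper is shown below. It assumes the common YOLO text layout, in which each line holds `class x_center y_center width height` with coordinates expressed as fractions of the image size. The function name `read_yolo_boxes` and the chosen dtypes are illustrative choices.

```python
import numpy as np


def read_yolo_boxes(fn):
    """Parse a YOLO annotation file into (boxes, labels) numpy arrays.

    boxes has shape (N, 4) holding [x_center, y_center, width, height];
    labels has shape (N,) holding the integer class index of each box.
    """
    boxes, labels = [], []
    with open(fn, "r") as f:
        for line in f:
            parts = line.split()
            if not parts:
                continue  # skip blank lines
            labels.append(int(parts[0]))
            boxes.append([float(v) for v in parts[1:5]])

    # reshape keeps the (N, 4) shape even when a file contains no boxes
    yolo_boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4)
    yolo_labels = np.array(labels, dtype=np.uint32)
    return yolo_boxes, yolo_labels
```

Returning the boxes and labels as two arrays with a matching first axis keeps them aligned for storage in separate tensors.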

Finally, let's create the tensors and iterate through all the images in the dataset in order to upload the data to Deep Lake. Boxes and their labels will be stored in separate tensors, and for a given sample, the first axis of the boxes array corresponds to the first-and-only axis of the labels array (i.e. if there are 3 boxes in an image, the labels array has length 3 and the boxes array is 3x4).
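The upload loop might be sketched as follows, assuming the Deep Lake 3.x API (`ds.create_tensor`, `ds.append`, and `deeplake.read`). The function name `upload_yolo_dataset` and its `read_boxes` parameter are hypothetical: `read_boxes` stands for any callable that takes an annotation path and returns a `(boxes, labels)` pair. The `CCWH` coordinate mode reflects the YOLO convention of storing the box center, width, and height.

```python
import os


def upload_yolo_dataset(ds, img_folder, lbl_folder, class_names, read_boxes):
    """Create the tensors and append one sample per image (Deep Lake 3.x assumed)."""
    # Imported inside the function so that defining this helper does not
    # require Deep Lake to be installed.
    import deeplake

    with ds:
        ds.create_tensor("images", htype="image", sample_compression="jpeg")
        ds.create_tensor("labels", htype="class_label", class_names=class_names)
        # YOLO boxes are fractional [x_center, y_center, width, height]
        ds.create_tensor(
            "boxes", htype="bbox",
            coords={"type": "fractional", "mode": "CCWH"},
        )

        # Assumption: every file in img_folder is an image with a matching
        # annotation file of the same stem in lbl_folder.
        for fn_img in sorted(os.listdir(img_folder)):
            stem = os.path.splitext(fn_img)[0]
            boxes, labels = read_boxes(os.path.join(lbl_folder, stem + ".txt"))
            ds.append({
                "images": deeplake.read(os.path.join(img_folder, fn_img)),
                "labels": labels,
                "boxes": boxes,
            })
    return ds
```

Appending all three tensors in a single `ds.append` call keeps the image, its boxes, and its labels at the same sample index.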

In order for Activeloop Platform to correctly visualize the labels, class_names must be a list of strings, where the numerical labels correspond to the index of the label in the list.
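To make the index convention concrete, the sketch below looks up each numeric label in `class_names` and fails loudly when a label has no matching entry. The helper name `labels_to_names` and the class names in the usage note are hypothetical and are not taken from the dataset.

```python
def labels_to_names(labels, class_names):
    """Look up the class name for each numeric label by list index."""
    if not all(isinstance(name, str) for name in class_names):
        raise TypeError("class_names must be a list of strings")

    names = []
    for label in labels:
        index = int(label)
        if not 0 <= index < len(class_names):
            raise ValueError(f"label {index} has no entry in class_names")
        names.append(class_names[index])
    return names
```

With `class_names = ["bird", "fish"]`, the labels `[1, 0, 1]` map to `["fish", "bird", "fish"]`.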

Inspect the Deep Lake Dataset

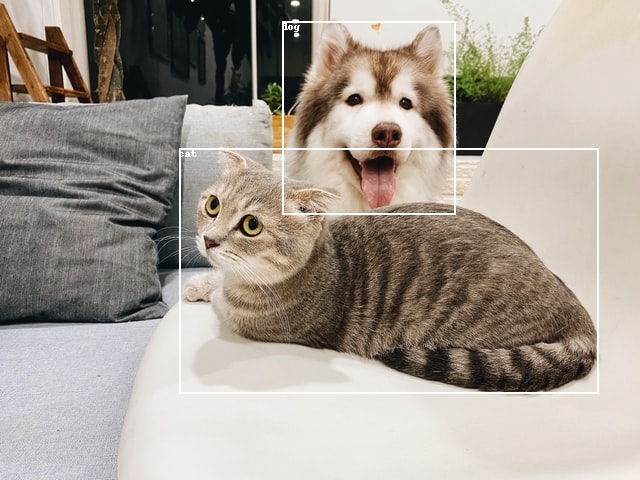

Let's check out the third sample from this dataset, which contains two bounding boxes.
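To draw the boxes on the image, the stored fractional coordinates first need to be converted to pixel corners. The sketch below shows that conversion, assuming the boxes are stored as fractional `[x_center, y_center, width, height]` as in YOLO. The function name `ccwh_to_pixel_corners` is a hypothetical helper.

```python
import numpy as np


def ccwh_to_pixel_corners(boxes, img_width, img_height):
    """Convert fractional [xc, yc, w, h] boxes to pixel [x1, y1, x2, y2]."""
    boxes = np.asarray(boxes, dtype=np.float64).reshape(-1, 4)

    # Scale the centers and half-sizes from fractions to pixels.
    xc = boxes[:, 0] * img_width
    yc = boxes[:, 1] * img_height
    half_w = boxes[:, 2] * img_width / 2
    half_h = boxes[:, 3] * img_height / 2

    corners = np.stack(
        [xc - half_w, yc - half_h, xc + half_w, yc + half_h], axis=1
    )
    return np.rint(corners).astype(int)
```

Assuming the Deep Lake 3.x API, the third sample could be read with `ds.images[2].numpy()`, `ds.boxes[2].numpy()`, and `ds.labels[2].numpy()`, with the class names available from `ds.labels.info.class_names`. Each row of the converted array can then be passed to a drawing routine such as Pillow's `ImageDraw.Draw(img).rectangle`.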

Congrats! You just created a beautiful object detection dataset! 🎉
