Training Reproducibility Using Deep Lake and Weights & Biases

How to achieve full reproducibility of model training by combining Deep Lake data lineage with W&B logging

Experiment tracking tools such as Weights & Biases (W&B) improve the reproducibility of your machine learning experiments by logging datasets, hyperparameters, source code, and more. When running model training with W&B and Deep Lake, Deep Lake automatically pushes the information required to reproduce the data, such as the uri, commit_id, and view_id, to the active W&B run. By fully logging the state of your dataset, model, and source code, you can achieve full reproducibility of model training runs for datasets of any size.

This playbook demonstrates how to use Activeloop Deep Lake with Weights & Biases to:

Upload a Deep Lake dataset in a W&B run and create a W&B artifact

Query the dataset using Activeloop and save the query result in optimized format for training

Train an object detection model on the saved query result and log the training parameters in a W&B run

Re-train the model with adjusted parameters and use W&B to compare the different training runs.

Prerequisites

In addition to packages commonly used in deep learning, this playbook requires installation of:

!pip install deeplake

!pip install wandb

!pip install albumentations

The required Python imports are:

import deeplake

import albumentations as A

from albumentations.pytorch import ToTensorV2

import numpy as np

import torch

import wandb

import time

import sys

import math

import torchvision

from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

You should also register with Activeloop and W&B, create API tokens for both tools, and log in to your W&B account via the CLI using the wandb login command.

Creating a W&B Artifact from a Deep Lake Dataset

While the most common use case for W&B is tracking training and evaluation jobs, you may also wrap your dataset upload jobs in a W&B run in order to create W&B artifacts. Any future runs that consume this dataset will also consume the corresponding W&B artifact. As a result, your Deep Lake datasets will be automatically tracked by W&B and can be visualized in the W&B artifacts lineage UI.

Any commit, copy or deepcopy operation inside a W&B run will create a W&B artifact. Here we emulate a dataset upload by copying a dataset that is already hosted by Activeloop.

You may replace dl-corp in the dataset path above with your own Deep Lake organization in order to run the code.

If we open the W&B page for the run above, we see that the datasets have been tracked and that artifacts were created for both the training and validation datasets.

Train a model using the Deep Lake dataset

Suppose we are building a surveillance system to count and classify vehicles in small-to-medium size parking lots. The visdrone dataset is a suitable starting point because it contains a variety of images of vehicles taken in cities using a UAV. However, many images are taken from very large viewing distances, like the image below. The resulting objects are small, which makes them difficult for object detection models to detect, and they are not relevant to our surveillance application.

Therefore, we filter out these images and train the model on a subset of the dataset that is more appropriate for our application.

Querying the dataset

Let's use the query engine on the Deep Lake UI to filter out samples that contain any cars with a width or height below 20 pixels. Since most images with vehicles contain cars, this query is a reliable proxy for imaging distance.

This query eliminates approximately 50% of the samples in the dataset.
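To make the filter condition concrete, here is a minimal sketch in plain Python of the per-sample predicate that the query expresses. It is not Deep Lake query syntax. The function name keep_sample, the [x, y, width, height] box layout, and the "car" label string are assumptions made for illustration.

```python
MIN_SIDE = 20  # pixels; the threshold described in the text


def keep_sample(boxes, labels, min_side=MIN_SIDE):
    """Return True if no car box in the sample is narrower or shorter than min_side.

    boxes  : list of [x, y, width, height] entries (assumed layout)
    labels : list of class-name strings, one per box (assumed)
    """
    for (x, y, w, h), label in zip(boxes, labels):
        if label == "car" and (w < min_side or h < min_side):
            return False  # at least one tiny car: drop the whole sample
    return True


# A sample containing a distant (tiny) car is dropped.
far_sample = keep_sample([[10, 10, 12, 8], [50, 60, 40, 30]], ["car", "car"])
# Small boxes of other classes do not affect the decision.
near_sample = keep_sample([[5, 5, 80, 45], [100, 20, 15, 10]], ["car", "pedestrian"])
print(far_sample, near_sample)
```

Note that the predicate rejects an entire image when any single car is too small, which is what makes it a proxy for imaging distance rather than a per-box filter.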

The steps above can also be performed programmatically.

Training the model

Before training the model, which will often happen on a different machine than the one where the dataset was created, we first re-load the data for training and optimize it for streaming performance.

An object detection model can be trained using the same approach that is used for all Deep Lake datasets, with several examples in our tutorials.

When using subsets of datasets, it's advised to remap the input classes for model training. In this example, the source dataset has 12 classes, but we are only interested in 9 classes containing objects we want to localize in our parking lot (bicycle, car, van, truck, tricycle, awning-tricycle, bus, motor, others). Therefore, we remap the classes of interest to values 0,1,2,3,4,5,6,7,8 before feeding them into the model for training.
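The remapping can be sketched in plain Python as follows. The order of SOURCE_CLASSES is an assumption and should be read from the dataset's own class names in practice. The helper name remap_labels and the choice to drop boxes of other classes are also illustrative, not taken from the original code.

```python
# Assumed source class order (12 classes); verify against the dataset itself.
SOURCE_CLASSES = [
    "ignored regions", "pedestrian", "people", "bicycle", "car", "van",
    "truck", "tricycle", "awning-tricycle", "bus", "motor", "others",
]
CLASSES_OF_INTEREST = [
    "bicycle", "car", "van", "truck", "tricycle",
    "awning-tricycle", "bus", "motor", "others",
]

# Source index -> new contiguous index (0..8), following the order above.
REMAP = {
    SOURCE_CLASSES.index(name): new_index
    for new_index, name in enumerate(CLASSES_OF_INTEREST)
}


def remap_labels(boxes, labels):
    """Drop boxes whose class is not of interest and renumber the rest."""
    kept = [(box, REMAP[label]) for box, label in zip(boxes, labels) if label in REMAP]
    return [box for box, _ in kept], [label for _, label in kept]


# Source labels 1 (pedestrian), 4 (car), 11 (others): the pedestrian is dropped.
boxes, labels = remap_labels(
    [[0, 0, 30, 30], [5, 5, 40, 20], [9, 9, 25, 25]], [1, 4, 11]
)
print(labels)  # -> [1, 8]
```

Building the mapping from class names rather than hard-coding indices keeps the remapping valid if the source class order differs from the one assumed here.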

Later in this playbook, we will experiment with different transform resolutions, so we specify the transform resolution (WIDTH, HEIGHT), BATCH_SIZE, and minimum bounding box area for transformation (MIN_AREA).

All bounding boxes below MIN_AREA are ignored in the transformation and are not fed to the model.
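As an illustration of what the MIN_AREA threshold does, the following sketch filters boxes by pixel area in plain Python. In the playbook this filtering happens inside the transformation. The helper name filter_small_boxes, the [x, y, width, height] layout, and the value 32 are assumptions for the example.

```python
MIN_AREA = 32  # hypothetical value, in square pixels


def filter_small_boxes(boxes, labels, min_area=MIN_AREA):
    """Keep only boxes whose area (width * height) is at least min_area."""
    kept_boxes, kept_labels = [], []
    for (x, y, w, h), label in zip(boxes, labels):
        if w * h >= min_area:
            kept_boxes.append([x, y, w, h])
            kept_labels.append(label)
    return kept_boxes, kept_labels


# Areas are 16 and 64 square pixels; only the second box survives.
kept_boxes, kept_labels = filter_small_boxes(
    [[0, 0, 4, 4], [10, 10, 8, 8]], [1, 3]
)
print(kept_boxes, kept_labels)  # -> [[10, 10, 8, 8]] [3]
```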

Next, let's specify an augmentation pipeline, which mostly utilizes Albumentations. We perform the remapping of the class labels inside the transformation function.

This playbook uses a pre-trained neural network from the torchvision.models module. We define helper functions for loading the model, training for 1 epoch (including W&B logging), and evaluating the model by computing the average IOU (intersection-over-union) for the bounding boxes.
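For reference, the IOU metric itself can be sketched in plain Python as shown here. This is not the playbook's evaluation helper: the [x_min, y_min, x_max, y_max] box layout is an assumption, and the sketch takes already-matched (prediction, ground truth) pairs as input rather than performing the matching.

```python
def box_iou(box_a, box_b):
    """IOU of two boxes given as [x_min, y_min, x_max, y_max]."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so disjoint boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_iou(pairs):
    """Mean IOU over (predicted_box, target_box) pairs; 0.0 for an empty list."""
    if not pairs:
        return 0.0
    return sum(box_iou(pred, target) for pred, target in pairs) / len(pairs)


identical = box_iou([0, 0, 10, 10], [0, 0, 10, 10])
half_shift = box_iou([0, 0, 10, 10], [5, 0, 15, 10])
disjoint = box_iou([0, 0, 10, 10], [20, 20, 30, 30])
print(identical, half_shift, disjoint)
```

Two equal-size boxes offset by half their width share one third of their union, which shows how quickly IOU drops for small localization errors on small objects.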

Training is performed on a GPU if one is available. Otherwise, it runs on the CPU.

Let's initialize the model and optimizer.

Next, we initialize the W&B run and create the dataloaders for training and validation data. We will log the training loss and validation IOU, as well as other parameters like the transform resolution.

Creation of the Deep Lake dataloaders is a trigger for the W&B run to log Deep Lake-related information. Therefore, the W&B run should be initialized before dataloader creation.

The model and data are ready for training 🚀!

Let's train the model for 8 epochs and save the gradients, parameters, and final trained model as an artifact.

In the W&B UI for this run, we see that in addition to the metrics and parameters that are typically logged by W&B, the Deep Lake integration also logged the dataset uri, commit_id, and view_id for the training and evaluation data. Together, these uniquely identify all the data that was used in this training project.

The Deep Lake integration with W&B logs the dataset uri, commit_id, and view_id in a training run even if a W&B artifact was not created for the Deep Lake dataset.
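To illustrate why these three values are sufficient to pin down the data, here is a small sketch that treats them as one immutable record and compares two runs. The DataState class, the same_data helper, and all example values are hypothetical. This is not the W&B or Deep Lake API, only a model of the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataState:
    """Hypothetical record of the values that identify a dataset state."""
    uri: str                        # where the dataset lives
    commit_id: str                  # which version of the dataset
    view_id: Optional[str] = None   # which saved query result, if any


def same_data(a: DataState, b: DataState) -> bool:
    """Two runs used identical data only if all three fields match."""
    return a == b


# Placeholder values for illustration only.
run_1 = DataState("hub://my-org/my-dataset", "commit-aaa", "view-1")
run_2 = DataState("hub://my-org/my-dataset", "commit-aaa", "view-1")
run_3 = DataState("hub://my-org/my-dataset", "commit-bbb", "view-1")
print(same_data(run_1, run_2), same_data(run_1, run_3))
```

A difference in any one field, such as a newer commit of the same dataset, is enough to mean the runs were trained on different data.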

Improving the Model Performance

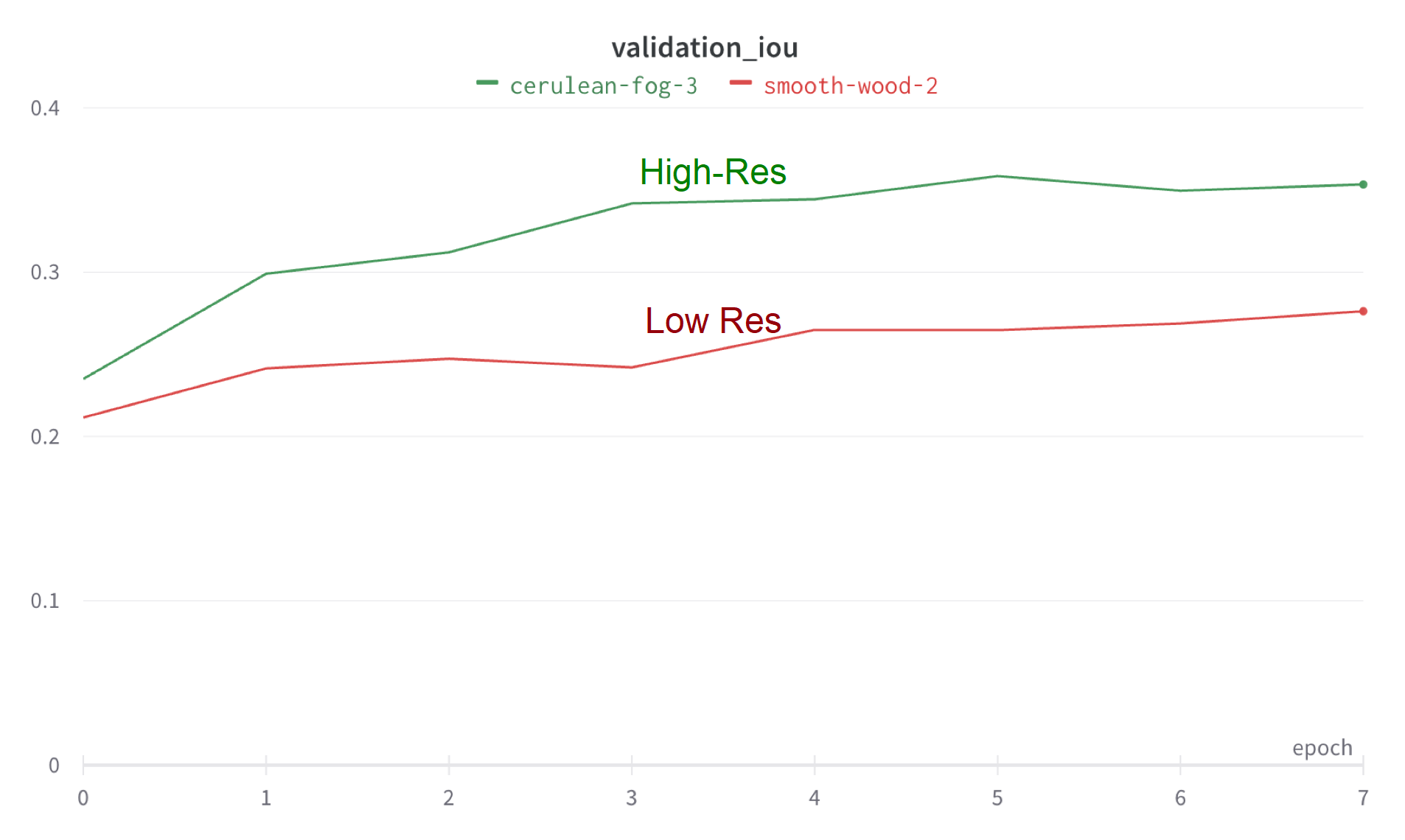

The average IOU of 0.29 achieved in the previous training run is likely unsatisfactory for deploying a working product. Two potential explanations for the poor performance are:

Despite filtering out samples with tiny objects, the dataset still contains fairly small bounding boxes that are difficult for object detection models to detect.

The differences between some objects in a birds-eye view are subtle, even for human perception, such as the cars and vans in the image below.

One remedy for both problems is to train models on higher-resolution images, so let's increase the resolution of the transformation and examine its effect on model performance. In addition to changing the resolution, we must also scale MIN_AREA proportionally to the image area, so that the same bounding boxes are ignored in both training runs.
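The proportional scaling of MIN_AREA can be sketched as follows. The resolutions and the starting MIN_AREA of 32 are hypothetical values chosen for the arithmetic, not the values used in the original runs, and scale_min_area is an illustrative helper name.

```python
def scale_min_area(min_area, old_size, new_size):
    """Scale an area threshold by the ratio of new image area to old image area."""
    old_w, old_h = old_size
    new_w, new_h = new_size
    return min_area * (new_w * new_h) / (old_w * old_h)


# Doubling both sides quadruples the image area, so the threshold quadruples too.
new_min_area = scale_min_area(32, (128, 128), (256, 256))
print(new_min_area)  # -> 128.0
```

Because box areas grow with the square of the linear resolution, scaling the threshold by the area ratio keeps the same set of boxes above and below the cutoff.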

After retraining the model using the same code above, we observe that the average IOU increased from 0.29 to 0.37, which is substantial given the simple increase in image resolution.
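To put the improvement in perspective, the absolute and relative gains can be computed directly from the two IOU values reported above. The variable names are illustrative.

```python
baseline_iou = 0.29   # first training run
retrained_iou = 0.37  # run with higher transform resolution

absolute_gain = retrained_iou - baseline_iou
relative_gain = absolute_gain / baseline_iou

print(f"{absolute_gain:.2f} absolute, {relative_gain:.1%} relative")
# -> 0.08 absolute, 27.6% relative
```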

The model is still not production-ready, and further opportunities for improvement are:

Assessing model performance on a per-image basis, which helps identify mislabeled or difficult data. A playbook for this workflow is available here.

Adding random real-world images that do not contain the objects of interest. This helps the model eliminate false positives.

Adding more data to the training set. 3000 images is likely not enough for a high-accuracy model.

Strengthening transformations that affect image color, blur, warping, and other properties.

Exploring different optimizers, learning rates, and schedulers.

Further increasing the transform resolution until diminishing returns are achieved.

Notes on GPU Performance

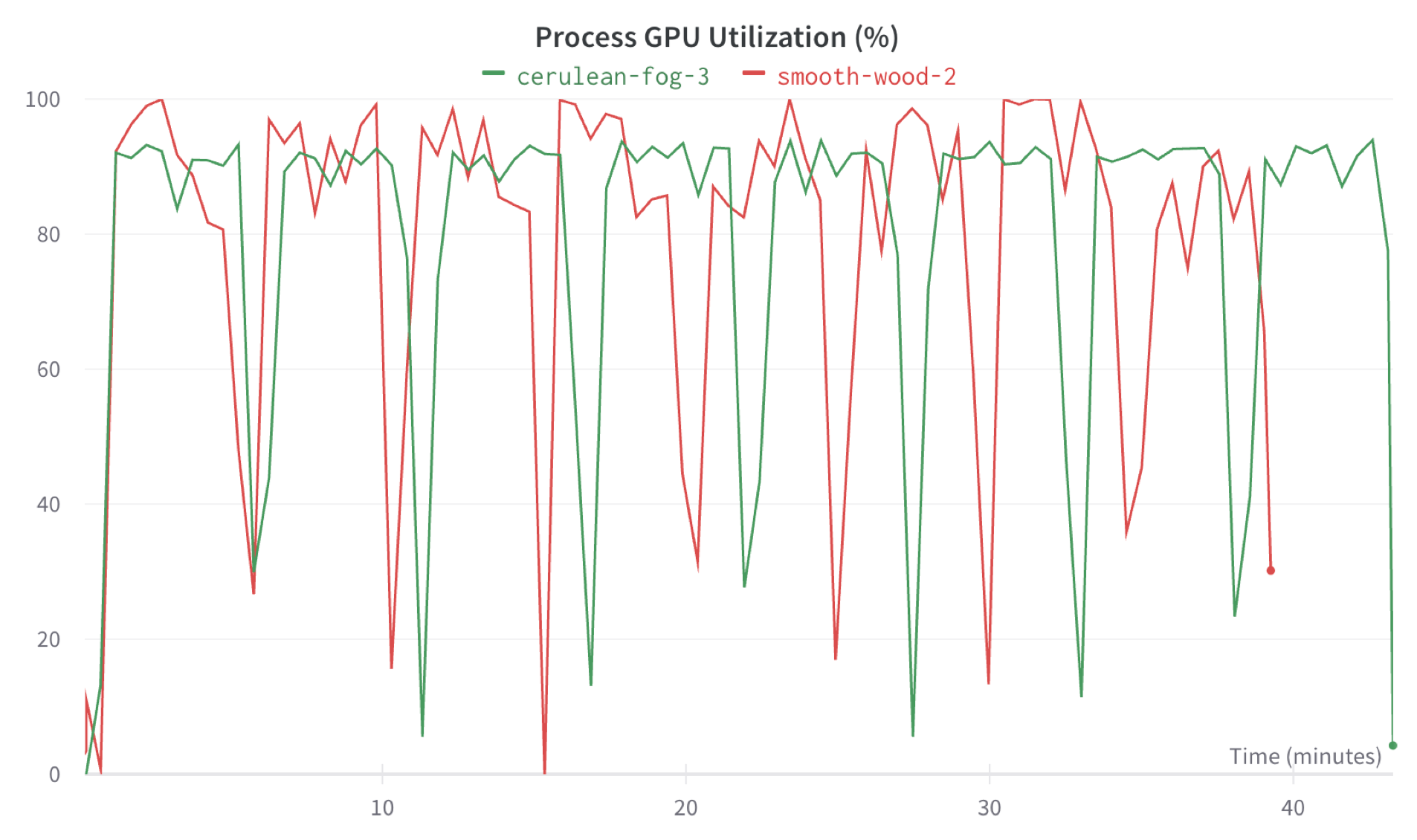

Using W&B automatic logging of CPU and GPU performance, we observe that the GPU utilization for the training runs on this Tesla V100 GPU was approximately 90%, aside from the dips between epochs when the shuffle buffer was filling. Note that the data was streamed from Activeloop storage (not in AWS) to an AWS SageMaker instance. This is made possible by Deep Lake's efficient data format and high-performance dataloader.

Congratulations 🚀. You can now use Activeloop Deep Lake and Weights & Biases to experiment and train models with full reproducibility!
